Welcome to the New Relic logging best practices guide. Here you'll find in-depth recommendations for how to optimize our logs features and manage data consumption.

Tip

Got lots of logs? Check out our tutorial on how to optimize and manage them.

Forwarding logs

Here are some tips on log forwarding to supplement our log forwarding docs:

When forwarding logs, we recommend using our New Relic infrastructure agent and/or APM agents. If you cannot use New Relic agents, use other supported agents (like FluentBit, Fluentd, and Logstash).

Here are some GitHub example configurations for supported logging agents:

Important

If your logs are stored in a directory on an underlying host/container, and our infrastructure agent is configured to collect logs from that directory, you may see duplicate logs collected by both the infrastructure agent and the APM agent. To avoid sending duplicate logs, see our recommendations in this guide.

Add a logtype attribute to all the data you forward. The attribute is required to use our built-in parsing rules and can also be used to create custom parsing rules based on the data type. The logtype attribute is considered a well-known attribute and is used in our quickstart dashboards for log summary information.

Use our built-in parsing rules for well-known log types. We automatically parse logs from many different well-known log types when you set the relevant logtype attribute.

Here's an example of how to add the logtype attribute to a log forwarded by our infrastructure agent:

    logs:
      - name: mylog
        file: /var/log/mylog.log
        attributes:
          logtype: mylog

Use New Relic integrations for forwarding logs for other common data types such as:

- Container environments: Kubernetes (K8S)

- Cloud provider integrations: AWS, Azure, or GCP

- Any of our other supported on-host integrations with logging
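If you manage many log files, generating the logs block from a list can help keep the logtype attribute consistent. The following is a minimal sketch that only renders text in the same shape as the mylog example above. The LogSource class, the render_logging_config function, and the second file path are hypothetical names chosen for illustration, not part of any New Relic tooling.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class LogSource:
    name: str     # label for this source in the agent config
    file: str     # path of the log file to forward
    logtype: str  # value for the logtype attribute


def render_logging_config(sources: List[LogSource]) -> str:
    """Render a `logs:` block shaped like the mylog example in this guide."""
    lines = ["logs:"]
    for src in sources:
        lines.append(f"  - name: {src.name}")
        lines.append(f"    file: {src.file}")
        lines.append("    attributes:")
        lines.append(f"      logtype: {src.logtype}")
    return "\n".join(lines) + "\n"


config = render_logging_config([
    LogSource(name="mylog", file="/var/log/mylog.log", logtype="mylog"),
    # Hypothetical second source; adjust the path to your environment.
    LogSource(name="apache-access", file="/var/log/apache2/access.log", logtype="apache"),
])
print(config)
```

Every rendered source carries a logtype, so no forwarded file ends up without one.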

Data partitions

If you consume, or plan to consume, a significant amount of log data each day, work on an ingest governance plan for logs, including a plan to partition the data into functional and thematic groupings. Proper use of data partitions can significantly improve performance. If you send all your logs to one giant "bucket" (the default log partition) in a single account, you could experience slower or failed queries, since each query has to scan all the logs in your account to return results. For more details, see NRQL query rate limits.

One way to improve query performance is by limiting the time range being searched. Searching for logs over long periods of time will return more results and require more time. Avoid searches over long time windows when possible, and use the time-range selector to narrow searches to smaller, more specific time windows.

Another way to improve search performance is by using data partitions. Here are some best practices for data partitions:

Make sure you use partitions early in your logs onboarding process. Create a strategy for using partitions so that your users know where to search and find specific logs. That way your alerts, dashboards, and saved views don't need to be modified if you implement partitions later in your logs journey.

Create data partitions that align with categories in your environment or organization that are static or change infrequently (for example, by business unit, team, environment, service, etc.).

Create partitions to optimize the number of events that must be scanned for your most common queries. If you have a high ingest volume, you'll have more events in shorter time windows, which causes searches over longer time periods to take longer and potentially time out. There isn't a hard and fast rule, but once the number of log events scanned by your common queries gets over 500 million (and especially over 1 billion), you may want to consider adjusting your partitioning.

Even if your ingest volume is low, you can also use data partitions for a logical separation of data, or just to improve query performance across separate data types.
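To make the 500 million and 1 billion figures above concrete, here is a rough back-of-the-envelope sketch. It assumes ingest is spread evenly over time and that a query scans every event in the partition for the selected window. The function names and the "ok", "review", and "split" labels are hypothetical.

```python
SCAN_WARNING = 500_000_000     # "over 500 million" from the guidance above
SCAN_CRITICAL = 1_000_000_000  # "especially over 1 billion"


def estimate_scanned_events(events_per_day: int, window_days: float) -> int:
    """Rough count of events one query scans in a partition over a time window."""
    return int(events_per_day * window_days)


def partition_advice(events_per_day: int, window_days: float) -> str:
    """Classify a common query against the rule-of-thumb thresholds."""
    scanned = estimate_scanned_events(events_per_day, window_days)
    if scanned > SCAN_CRITICAL:
        return "split"   # strongly consider more granular partitions
    if scanned > SCAN_WARNING:
        return "review"  # consider adjusting partitions or the time window
    return "ok"


# A partition ingesting 100 million events per day, queried over 7 days,
# scans roughly 700 million events.
print(partition_advice(100_000_000, 7))
```

Shortening the time window and splitting the partition both lower the estimate, which matches the two levers described in this section.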

To search data partitions in the Logs UI, open the partition selector and check the partitions you want to search. If you're using NRQL, use the FROM clause to specify the Log or Log_<partition> to search. For example:

    FROM Log_<my_partition_name> SELECT * SINCE 1 hour ago

Or to search logs on multiple partitions:

    FROM Log, Log_<my_partition_name> SELECT * SINCE 1 hour ago
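If you generate these queries from scripts, a small helper can keep the FROM clause and the time window explicit. This is a sketch only: build_log_query is a hypothetical helper, and the name check (Log, or Log_ followed by letters, digits, and underscores) is an assumption about partition naming that you should verify against your account.

```python
import re
from typing import List

# Assumed naming rule: the default partition "Log", or "Log_<name>".
_PARTITION_NAME = re.compile(r"^Log(_[A-Za-z0-9_]+)?$")


def build_log_query(partitions: List[str], since: str = "1 hour ago") -> str:
    """Build an NRQL string that searches one or more log partitions."""
    if not partitions:
        raise ValueError("at least one partition is required")
    for name in partitions:
        if not _PARTITION_NAME.match(name):
            raise ValueError(f"unexpected partition name: {name!r}")
    return f"FROM {', '.join(partitions)} SELECT * SINCE {since}"


print(build_log_query(["Log_team_a"]))
print(build_log_query(["Log", "Log_team_a"], since="30 minutes ago"))
```

Making the since argument required in your own version is one way to nudge users toward narrow time windows.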

Securing log data access

When working with log data that may contain sensitive information, it's important to control who can access specific logs. Here are best practices for securing your log data:

Use data access control: Implement data access control policies to restrict which users can view specific log partitions. This is especially important when logs contain personally identifiable information (PII), security data, or business-sensitive information.

Be aware of data exposure risks: Certain capabilities can bypass data access restrictions. Review potential data exposure risks and follow these best practices:

- Limit who has Modify access to the Data partitions rules capability, as this allows users to reconfigure partitions and potentially access restricted data.

- Restrict Modify access to the Query keys capability to trusted administrators only, as query keys can bypass user-level data access restrictions.

- Rotate or delete legacy Insights API keys that don't respect data access policies.

- Create custom roles without alert management capabilities for users who shouldn't access sensitive data through alert conditions or notifications.

Align partitions with access control: When planning your partition strategy, consider which teams should have access to which logs. Group logs by team, service, or sensitivity level to make access control management easier.

Parsing logs

Parsing your logs at ingest is the best way to make your log data more usable by you and other users in your organization. When you parse out attributes, you can easily use them to search in the Logs UI and in NRQL without having to parse data at query time. This also allows you to use them easily in alerts and dashboards.

For parsing logs we recommend that you:

Parse your logs at ingest to create

attributes(or fields), which you can use when searching or creating and alerts. Attributes can be strings of data or numerical values.Use the

logtypeattribute you added to your logs at ingest, along with other NRQLWHEREclauses to match the data you want to parse. Write specific matching rules to filter logs as precisely as possible. For example:WHERE logtype='mylog' AND message LIKE '%error%'Use our built-in parsing rules and associated

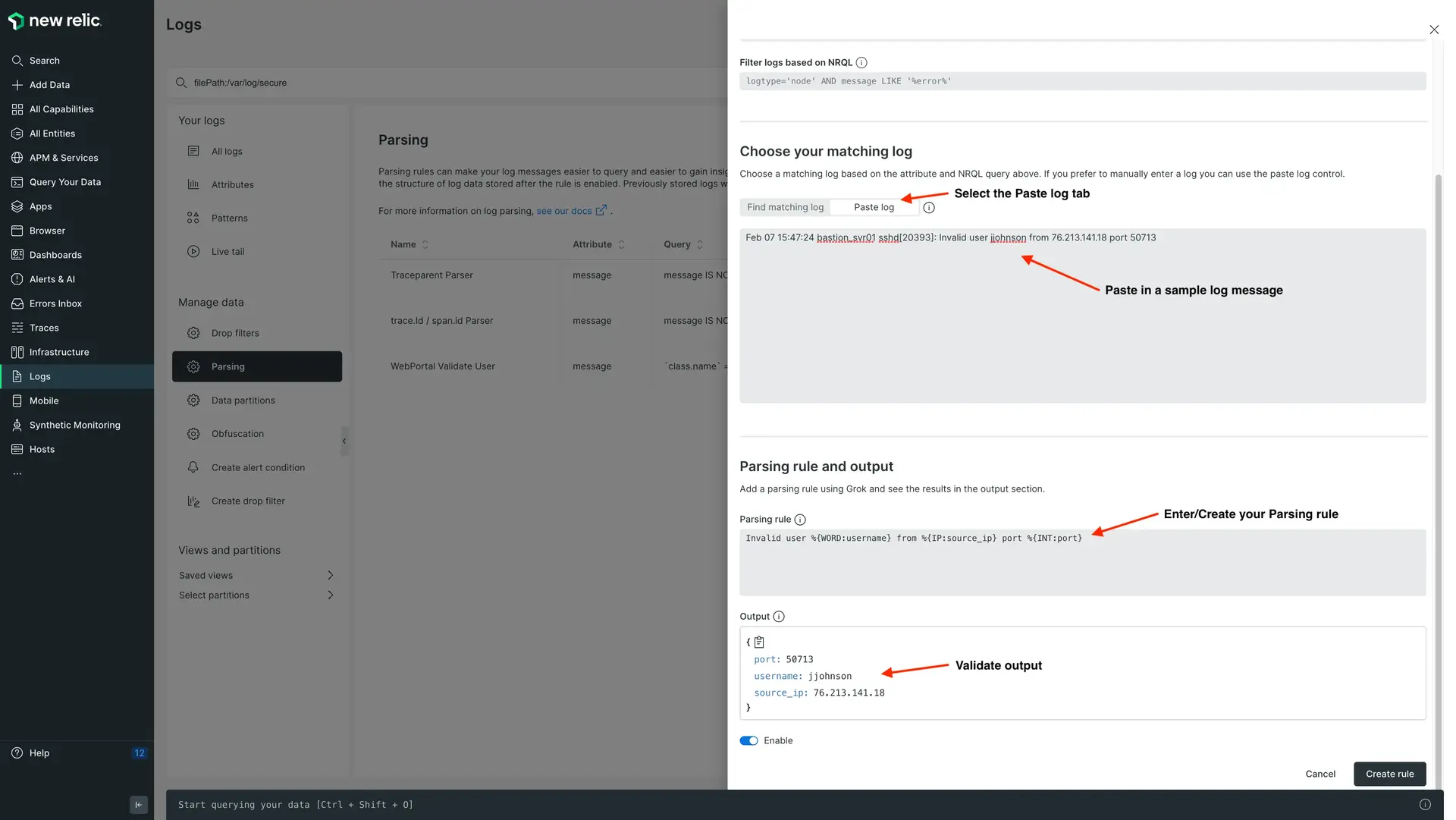

logtypeattribute whenever possible. If the built-in rules don't work for your data, use a differentlogtypeattribute name (i.e.,apache_logsvsapache,iis_w3c_customvsiis_w3c), and then create a new parsing rule in the UI using a modified version of the built-in rules so that it works for your log data format.Use our Parsing UI to test and validate your Grok rules. Using the

Paste logoption, you can paste in one of your log messages to test your Grok expression before creating and saving a permanent parsing rule.

Use external FluentBit configurations for parsing multi-line logs and for other, more extensive pre-parsing before ingesting into New Relic. For details and configuration of multi-line parsing with our infrastructure agent, see this blog post.

Create optimized Grok patterns to match the filtered logs to extract attributes. Avoid using expensive Grok patterns like GREEDYDATA excessively. If you need help identifying any sub-optimal parsing rules, talk to your New Relic account representative.

GROK best practices

- Use Grok types to specify the type of attribute value to extract. If omitted, values are extracted as strings. This is especially important for numerical values if you want to use NRQL functions and operators (for example, monthOf(), max(), avg(), >, <) on these attributes.

- Use the Parsing UI to test your Grok patterns. You can paste sample logs in the Parsing UI to validate your Grok or regex patterns and confirm that they extract the attributes you expect.

- Add anchors to parsing logic: ^ to indicate the beginning of a line, or $ to indicate the end of a line.

- Use ()? around a pattern to identify optional fields.

- Avoid overusing expensive Grok patterns like %{GREEDYDATA}. Try to always use a valid Grok pattern and Grok type when extracting attributes.
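The practices above carry over to plain regular expressions, which can be handy for prototyping a pattern locally before you test the real Grok rule in the Parsing UI. The sketch below uses Python's re module, not Grok, and the log line format and attribute names are made up for illustration. It shows anchors, an optional field, specific sub-patterns instead of a catch-all, and explicit numeric types.

```python
import re
from typing import Optional

# Anchored with ^ and $; the trailing duration field is optional via (...)?.
# Each field uses a specific pattern (\d+, \S+) rather than a catch-all like .*
LINE = re.compile(
    r"^(?P<client_ip>\d{1,3}(?:\.\d{1,3}){3}) "
    r"(?P<method>[A-Z]+) "
    r"(?P<path>\S+) "
    r"(?P<status>\d{3})"
    r"(?: (?P<duration_ms>\d+)ms)?$"
)

# Mirrors the idea of Grok types: cast numeric fields, leave the rest as strings.
CASTS = {"status": int, "duration_ms": int}


def parse_line(line: str) -> Optional[dict]:
    """Extract typed attributes from one log line, or None if it doesn't match."""
    match = LINE.match(line)
    if match is None:
        return None
    fields = {k: v for k, v in match.groupdict().items() if v is not None}
    for name, cast in CASTS.items():
        if name in fields:
            fields[name] = cast(fields[name])
    return fields


print(parse_line("10.0.0.1 GET /index.html 200 35ms"))
print(parse_line("10.0.0.1 GET /index.html 404"))
```

Because status and duration_ms come out as integers, numeric comparisons and aggregations work on them without a conversion step, which is the same reason to set a Grok type on numeric attributes.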

Drop filter rules

Drop logs at ingest

- Create drop filter rules to drop logs that aren't useful or aren't required to satisfy any use cases for dashboards, alerts, or troubleshooting.

Drop attributes from your logs at ingest

- Create drop rules to drop unused attributes from your logs.

- Drop the message attribute after parsing. If you parse the message attribute to create new attributes from the data, drop the message field.

- Example: If you're forwarding data from AWS infrastructure, you can create drop rules to drop any AWS attributes that may create unwanted data bloat.
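To get a feel for how much a drop rule could save before you create one, you can simulate it on a sample record. This is a local approximation only: it does not create drop rules, the aws.* attribute names are hypothetical, and the JSON byte count is a rough stand-in rather than how ingest is actually metered.

```python
import json


def apply_drop_rules(record: dict, drop_prefixes: tuple = ("aws.",),
                     drop_message: bool = True) -> dict:
    """Return a copy of `record` without the attributes a drop rule would remove."""
    kept = {}
    for key, value in record.items():
        if drop_message and key == "message":
            continue  # already parsed into separate attributes
        if key.startswith(drop_prefixes):
            continue  # unused cloud metadata
        kept[key] = value
    return kept


record = {
    "message": "10.0.0.1 GET /index.html 200 35ms",
    "logtype": "mylog",
    "status": 200,
    "duration_ms": 35,
    "aws.region": "us-east-1",        # hypothetical attribute name
    "aws.accountId": "123456789012",  # hypothetical attribute name
}

slim = apply_drop_rules(record)
before = len(json.dumps(record))
after = len(json.dumps(slim))
print(sorted(slim))
print(f"{before} -> {after} bytes of JSON")
```

Only drop message once you have confirmed that every attribute you need is extracted from it at ingest.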

New Relic logs sizing

- How we bill for storage may differ from some of our competitors. How we meter for log data is similar to how we meter and bill for other types of data, which is defined in Usage calculation.

- If our cloud integrations (AWS, Azure, GCP) are installed, we will add cloud metadata to every log record, which will add to the overall ingest bill. This data can be dropped though to reduce ingest.

- The main drivers for log data overhead are below, in order of impact:

- Cloud integrations

- JSON formatting

- Log patterns (You can disable/enable patterns in the Logs UI.)

Searching logs

For common log searches, create and use saved views in the UI. Create a search for your data and click + Add Column to add additional attributes to the UI table. You can then move the columns around so that they appear in the order you want, and save the result as a saved view with either private or public permissions. Configure the saved views to be public so that you and other users can easily run common searches with all relevant attribute data displayed. This is good practice for third-party applications like Apache and NGINX, so users can easily see those logs without searching.

Use the query builder to run searches using NRQL, utilizing its advanced functions. To query logs from multiple accounts and identify them with their corresponding account IDs, include accountId() as accountId in the SELECT statement of your NRQL query.

Create or use available quickstart dashboards to answer common questions about your logs and to look at your log data over time in time series graphs. Create dashboards with multiple panels to slice and dice your log data in many different ways.

Use our advanced NRQL functions like capture() or aparse() to parse data at search time.
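As an illustration of query-time parsing, the sketch below builds an NRQL string that uses capture() with a named group and checks the same regular expression locally with Python's re module. The capture_query helper, the status=<digits> message format, and the exact query text are assumptions made for this example. Confirm the syntax in the query builder before relying on it.

```python
import re

# Named group "status" pulls the digits that follow "status=" in the message.
PATTERN = r".*status=(?P<status>\d+).*"


def capture_query(attribute: str, pattern: str, alias: str,
                  since: str = "1 hour ago") -> str:
    """Build an NRQL string that extracts `alias` from `attribute` at query time."""
    return (
        f"FROM Log SELECT capture({attribute}, r'{pattern}') AS {alias}, "
        f"accountId() AS accountId SINCE {since}"
    )


query = capture_query("message", PATTERN, "status")
print(query)

# Check the pattern against a sample message before pasting the query anywhere.
sample = "GET /cart status=503 upstream timeout"
extracted = re.fullmatch(PATTERN, sample).group("status")
print(extracted)
```

If you find yourself running the same capture() in many queries, that is usually a sign the attribute should be parsed at ingest instead.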

Install the Logs analysis and/or APM logs monitoring quickstart dashboards to quickly gain more insight into your log data. To add these dashboards go to one.newrelic.com > Integrations & Agents > Logging > Dashboards.

What's next?

See Get started with log management.