Building, deploying, and monitoring generative AI applications is complex. Together, NVIDIA and New Relic offer a streamlined path for developing, deploying, and monitoring AI-powered enterprise applications in production.

NVIDIA NIM is a set of inference microservices that provide prebuilt, optimized LLMs and simplify their deployment across NVIDIA-accelerated infrastructure, both in the data center and in the cloud.

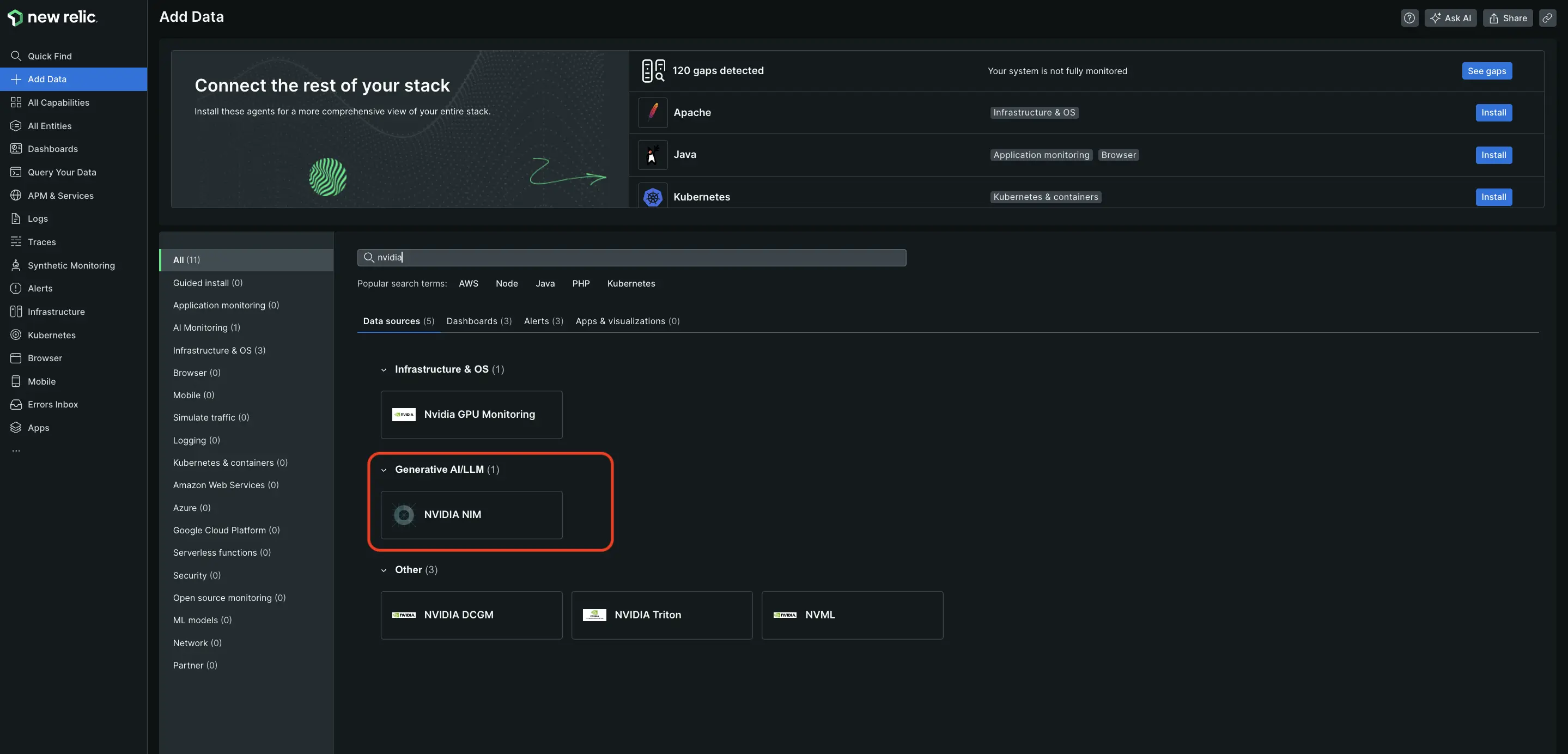

New Relic AI monitoring now integrates with NVIDIA NIM to help engineers quickly troubleshoot and optimize the performance, quality, and cost of AI applications, ultimately helping organizations adopt AI faster and achieve a quicker ROI.

- Full AI stack visibility: Spot issues faster with a holistic view across applications, NVIDIA GPU-based infrastructure, and the AI layer.

- Deep trace insights for every response: Fix performance and quality issues such as bias, toxicity, and hallucinations by tracing the entire lifecycle of each AI response.

- Model inventory: Easily isolate model-related performance, error, and cost issues by tracking key metrics across all NVIDIA NIM inference microservices in one place.

- Model comparison: Compare the performance of the NVIDIA NIM inference microservices you run in production in a single view to choose the best model for each use case.

- Enhanced data security: On top of the security advantages of self-hosting models with NVIDIA NIM, New Relic lets you exclude sensitive data (PII) in your AI requests and responses from monitoring.